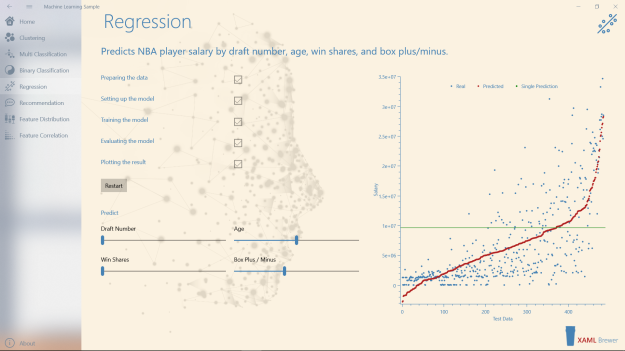

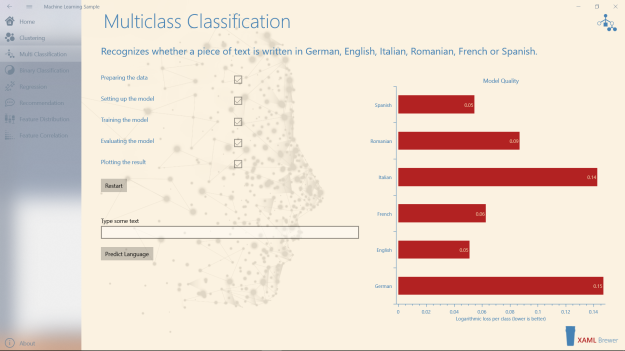

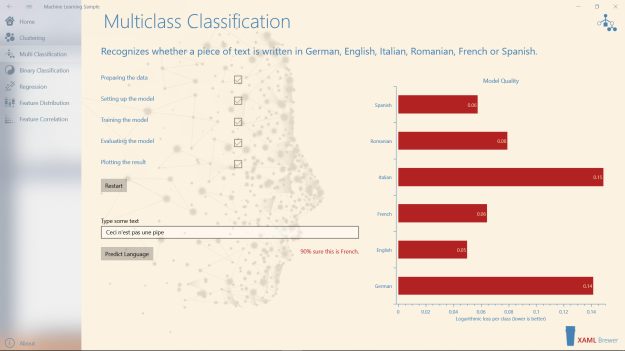

In this article we will use ML.NET to build and compare four Machine Learning Binary Classification pipelines. Each model uses a different algorithm to predict the quality of wine from 11 physicochemical features. The characteristics of the prediction models are visualized using OxyPlot. All the code is in C# (“Look mom, no Python!”) and hosted in a UWP app together with some other ML.NET use cases.

Here’s what the Binary Classification sample page looks like. It displays the quality metrics of each model, and a selection of wines on which the models disagree:

This Binary Classification sample evolved from a copy of the code and the datasets from this article on Rubik’s Code.

In the first part of this article we provide some relevant technical background (“Machine Learning for Developers”).

In the second part we focus on model evaluation and pipeline customization. We will move rapidly through the basic steps of implementing a typical Machine Learning use case in ML.NET. If you want more details on each of the steps, please (re-)visit the previous articles in this series.

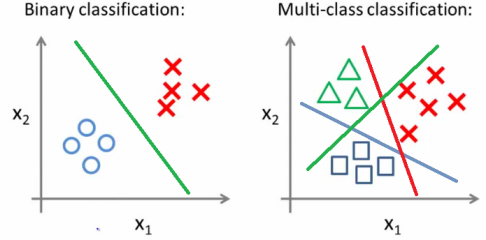

Binary Classification

Binary Classification uses a classification rule to place the elements of a given set into one of two groups, or to predict which group each element belongs to. In Machine Learning, Binary Classification is a part of supervised learning, which means that the classifier requires labeled (rated) samples for training and evaluation.

Math, science, decision trees, unraveling the mysteries

Binary Classification boils down to the universal problem of separating the good from the bad. It should not come as a surprise that very diverse algorithms exist, originating from very diverse domains (mathematics, probability theory, biology, operations research). Here’s a small list of Binary Classification algorithms:

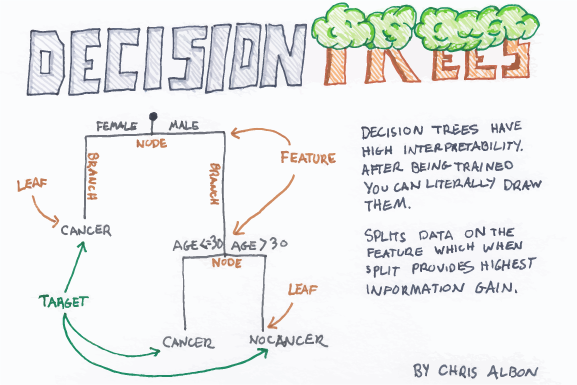

- Decision trees

- Random forests

- Bayesian networks

- Support vector machines

- Neural networks

- Logistic regression

- Probit model

These algorithms make different assumptions on your data and its distribution, have different performance in training and inferencing, and have different configuration options (model parameters). Fortunately there are some good resources available that help you determine the appropriate model for your Machine Learning problem, like this ‘How to choose algorithms for Azure Machine Learning Studio’. Here’s a relevant table from this article. It compares some Binary Classification models:

If you’re more into diagrams, there’s an excellent graphical cheat sheet right here. Here’s its binary classification overview:

ML.NET has implementations for most binary classification algorithms, but recommends the following trainers:

- AveragedPerceptronTrainer

- StochasticGradientDescentClassificationTrainer

- LightGbmBinaryTrainer

- FastTreeBinaryClassificationTrainer

- SymSgdClassificationTrainer

The BinaryClassificationCatalog has more members than this. Here are all the current trainer classes:

- AveragedPerceptronTrainer

- BinaryClassificationGamTrainer

- FastForestClassification

- FastTreeBinaryClassificationTrainer

- FieldAwareFactorizationMachineTrainer

- LightGbmBinaryTrainer

- LinearSvmTrainer

- LogisticRegression

- PriorTrainer

- RandomTrainer (removed: https://github.com/dotnet/machinelearning/pull/2849)

- StochasticGradientDescentClassificationTrainer

- SymSgdClassificationTrainer

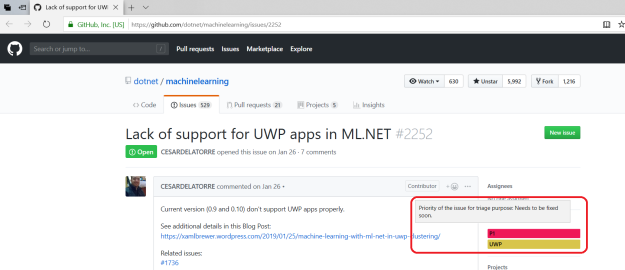

We will not compare all of these algorithms in this article, just a representative set. The choice was limited by the hosting technology: some algorithms still have some known compatibility glitches with UWP.

Here’s some background on the contestants in our comparison:

Linear SVM

Linear SVM has its roots in mathematics. A Support Vector Machine (SVM) represents each training example as a p-dimensional vector (a list of p numbers) in space, and then calculates a (p-1)-dimensional hyperplane so that the examples of the separate categories are divided by a clear gap that is as wide as possible. New examples are then mapped into that same space and predicted to belong to a category based on which side of the gap they fall.

In two-dimensional space the hyperplane is just a line dividing a plane in two parts (one for each class), like in this illustration from Chris Albon:

Linear SVM tries to create a hyperplane with all positive samples on one side and all negative samples on the other. Whether that works and how hard this is, depends on the data set. When the data are not linearly separable, a hinge loss function is introduced to represent the price paid for inaccurate predictions. The configuration of the model will try to minimize this function.
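To make the hinge loss a bit more tangible, here is a minimal sketch in plain C#. It is not how ML.NET implements its trainer; the class and method names are hypothetical, and the labels are assumed to be encoded as +1 and -1. The loss is zero for a sample that sits on the correct side of the hyperplane with a margin of at least 1, and grows linearly the further the sample lands on the wrong side.

```csharp
using System;
using System.Linq;

// Hypothetical helper for illustration only, not part of ML.NET.
public static class HingeLossSketch
{
    // Signed score of a sample: positive on one side of the hyperplane, negative on the other.
    public static double Score(double[] weights, double bias, double[] features) =>
        weights.Zip(features, (w, x) => w * x).Sum() + bias;

    // Hinge loss for one sample; label is assumed to be +1 or -1.
    // Zero when the sample is on the correct side with a margin of at least 1.
    public static double HingeLoss(double score, int label) =>
        Math.Max(0.0, 1.0 - label * score);
}
```

Training then comes down to finding the weights and bias that keep the sum of these per-sample losses (plus a regularization term) as small as possible.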

Linear SVM is a workhorse, simple and fast, but it may be overly simplistic for some problems. For a deeper dive into SVM, check this article.

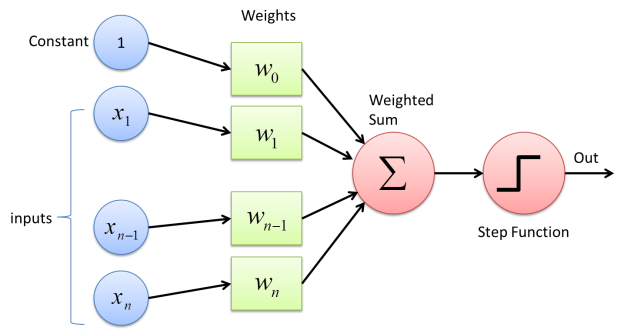

Perceptron

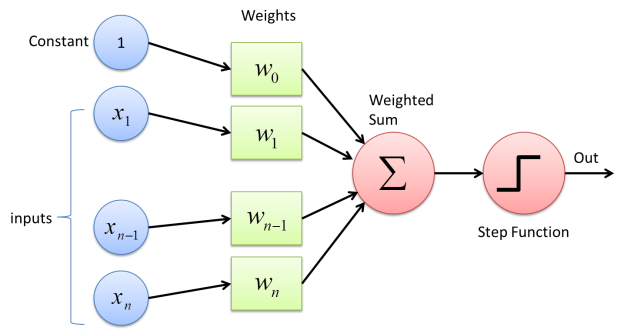

The perceptron algorithm is inspired by how a brain works, and hence has its origin in biology. A perceptron can be considered as a single artificial neuron that gets an input signal for each feature, with the strength of the signal being the feature value. During training, the perceptron learns a weight for each feature. If the weighted sum of the feature values passes a threshold value, the activation function makes the neuron ‘fire’ and the predicted result is positive. Here’s what this looks like in an image, from “What the Hell is Perceptron”:

The learning happens one example at a time and with multiple iterations over the dataset, so you may expect long training times over larger datasets. Just like SVM, the perceptron is a linear classifier, so it will make mistakes if the data is not linearly separable. There’s an excellent deeper dive into perceptron right here.
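The one-example-at-a-time learning described above can be sketched as follows. This is the plain textbook perceptron, not the averaged variant that ML.NET ships, and all names are hypothetical. The threshold is folded into a bias term, so the neuron fires when the weighted sum plus bias is above zero.

```csharp
using System;

// Hypothetical illustration of the classic perceptron rule, not ML.NET's AveragedPerceptron.
public static class PerceptronSketch
{
    // The neuron 'fires' (predicts positive) when the weighted sum plus bias exceeds zero.
    public static bool Predict(double[] weights, double bias, double[] features)
    {
        double sum = bias;
        for (int i = 0; i < weights.Length; i++)
            sum += weights[i] * features[i];
        return sum > 0;
    }

    // One pass over the data. Weights and bias change only when a prediction is wrong.
    public static void TrainEpoch(
        double[] weights, ref double bias, double[][] samples, bool[] labels, double learningRate)
    {
        for (int n = 0; n < samples.Length; n++)
        {
            if (Predict(weights, bias, samples[n]) == labels[n]) continue;

            // Nudge the hyperplane towards the misclassified sample.
            double direction = labels[n] ? 1.0 : -1.0;
            for (int i = 0; i < weights.Length; i++)
                weights[i] += learningRate * direction * samples[n][i];
            bias += learningRate * direction;
        }
    }
}
```

Calling TrainEpoch repeatedly corresponds to the multiple iterations over the dataset mentioned above.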

Logistic Regression

Logistic Regression has its origin in statistics. Despite the ‘regression’ in its name (regression is predicting a continuous value) it is actually a powerful tool for two-class and multiclass classification. That is because Logistic Regression predicts a probability (“the likelihood”) – a continuous value between 0 and 1 that can easily be mapped to a class or a Boolean.

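Strictly speaking, the decision boundary of logistic regression is still linear in the features, but the model does not output a hard yes/no. It passes the weighted sum of the features through the logistic function, and ‘logistic’ refers to that function’s specific S-shaped curve. This logistic curve is almost flat at both extremes, and steepest in the middle. This makes it a natural fit for dividing data into groups (image from “Understanding Logistic Regression in Python”):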

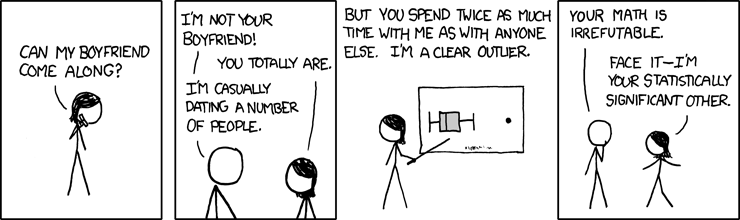

Logistic Regression does not work well on data that has many outliers, or when there is high correlation between the features. Check our articles on correlation analysis and distribution analysis on how to detect and avoid these. For more details on Logistic Regression, check these course notes.
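As a small illustration of how a probability is mapped to a class, here is a sketch of the logistic function in plain C#. The names and the 0.5 cut-off are assumptions made for this example; they are not taken from the sample app or from ML.NET.

```csharp
using System;

// Hypothetical illustration of the logistic function, not ML.NET code.
public static class LogisticSketch
{
    // Logistic (sigmoid) function: maps any real score to a value between 0 and 1.
    public static double Sigmoid(double z) => 1.0 / (1.0 + Math.Exp(-z));

    // Probability of the positive class for one sample.
    public static double Probability(double[] weights, double bias, double[] features)
    {
        double z = bias;
        for (int i = 0; i < weights.Length; i++)
            z += weights[i] * features[i];
        return Sigmoid(z);
    }

    // Maps the probability to a Boolean using a cut-off (0.5 unless stated otherwise).
    public static bool Classify(double probability, double threshold = 0.5) =>
        probability >= threshold;
}
```

Moving the threshold up or down trades false positives for false negatives without retraining the model.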

Stochastic Dual Coordinate Ascent

Stochastic Dual Coordinate Ascent (SDCA) is an algorithm for large-scale supervised learning that has its origins in mathematical programming, the science of finding the best element with regard to some criteria from a set of available alternatives. Most Machine Learning algorithms try to learn their model’s parameters by minimizing some kind of loss function, such as ordinary least squares in linear regression or the already mentioned hinge loss function. When the training set becomes bigger and no simple formulas exist, solving for the parameters analytically (with closed-form equations) becomes computationally impractical. In those cases it makes more sense to use an optimization algorithm like Coordinate Descent or Gradient Descent. Such algorithms bring you ‘close enough’ to the mathematical solution in a number of steps that each make small adjustments to the parameters. When even these calculations are too expensive, you can try reversing the problem: instead of minimizing the original (primal) objective, you maximize a related dual objective whose value never exceeds the primal one. The difference between the two is the duality gap. In a nutshell, that’s what SDCA does: it iteratively picks one random (stochastic) sample from the training set and updates the model parameters, until the duality gap is sufficiently small.

Microsoft’s version of SDCA is optimized for large out-of-memory datasets and parallelism.
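For readers who prefer symbols, the duality gap mentioned above can be written down compactly. The notation is an assumption made for this sketch: P(w) stands for the original (primal) objective that is minimized over the model parameters w, and D(α) for the dual objective that is maximized over the dual variables α.

```latex
D(\alpha) \le P(w) \quad \text{for every } w \text{ and } \alpha,
\qquad
\text{gap}(w, \alpha) = P(w) - D(\alpha) \ge 0
```

Because the gap can never be negative, a small gap guarantees that the current parameters are close to the best achievable value, which is what makes it a convenient stopping criterion.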

Decision trees

We regret that there is no Decision Tree based algorithm in our sample app. The implementations of this family of algorithms in ML.NET are currently broken for UWP.

Metrics Reloaded

Evaluating the prediction model is an essential part of any Machine Learning project. There are many metrics available to assess the quality of a model and/or compare it to another model. Most of these metrics are built around the confusion matrix which describes the performance of the model. For a two-class problem this matrix looks like this (image from Yann Dubois’ awesome Machine Learning glossary):

The confusion matrix holds the number of

- True Positives: The cases in which the model predicted YES and the actual output was also YES,

- True Negatives: The cases in which the model predicted NO and the actual output was NO,

- False Positives: The cases in which the model predicted YES and the actual output was NO, and

- False Negatives: The cases in which the model predicted NO and the actual output was YES.
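The four counts are easy to tally yourself. The following sketch compares a list of predicted labels with the actual labels; the class and method names are hypothetical, and in the sample app ML.NET computes these numbers for us.

```csharp
using System;

// Hypothetical helper for illustration only; ML.NET exposes these counts through its metrics classes.
public static class ConfusionMatrixSketch
{
    // Counts the four outcomes by comparing each prediction with the actual label.
    public static (int TP, int TN, int FP, int FN) Tally(bool[] predicted, bool[] actual)
    {
        if (predicted.Length != actual.Length)
            throw new ArgumentException("Both arrays must have the same length.");

        int tp = 0, tn = 0, fp = 0, fn = 0;
        for (int i = 0; i < predicted.Length; i++)
        {
            if (predicted[i] && actual[i]) tp++;            // predicted YES, actual YES
            else if (!predicted[i] && !actual[i]) tn++;     // predicted NO, actual NO
            else if (predicted[i]) fp++;                    // predicted YES, actual NO
            else fn++;                                      // predicted NO, actual YES
        }
        return (tp, tn, fp, fn);
    }
}
```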

Here are some common metrics for the evaluation of binary classifiers (illustrations by Chris Albon):

Accuracy

Accuracy is the ratio of the number of correct predictions to the total number of input samples.

Formula: (TP + TN) / (TP + FP + TN + FN)

Accuracy is the most common model quality metric, but it’s not always useful. For a highly unbalanced distribution and/or when the cost of making a mistake is high, accuracy is not the metric you are looking for.

Let’s say there is a one percent chance to find rare elements for car batteries somewhere in the underground, or a one percent chance of discovering some rare disease in a patient, and you want to create a prediction model for this. A model that would always return false (“computer says no”) has an accuracy of 99% in that scenario, but it would still be worthless.
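To put numbers on this, assume a hypothetical set of 1,000 samples of which 10 are positive. The always-no model produces TP = 0, FP = 0, TN = 990 and FN = 10:

```latex
\text{Accuracy} = \frac{TP + TN}{TP + FP + TN + FN} = \frac{0 + 990}{0 + 0 + 990 + 10} = 0.99,
\qquad
\frac{TP}{TP + FN} = \frac{0}{0 + 10} = 0
```

The second ratio is the fraction of actual positives that the model finds. It is zero, which is exactly why the 99% accuracy is meaningless here.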

Recall

Recall (a.k.a. Sensitivity) is the fraction of positive observations that have been correctly predicted.

Formula: TP / (TP + FN)

If missing a positive example is important/expensive, you should focus on maximizing recall.

Specificity

Specificity is the recall for the negatives (the ability to find true negatives).

Formula: TN / (TN + FP)

Precision

Precision is the fraction of positive predictions that were actually positive.

Formula: TP / (TP + FP)

If false positives are expensive, maximize precision.

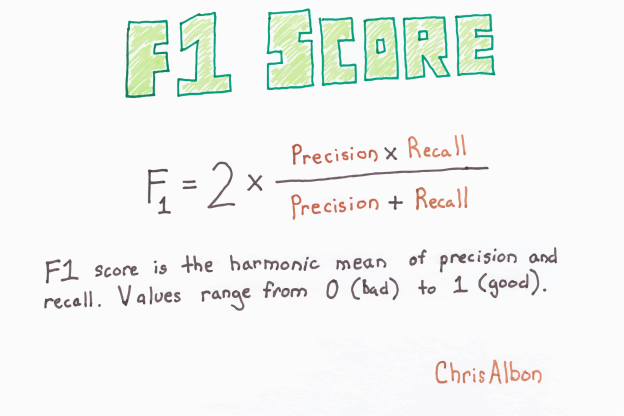

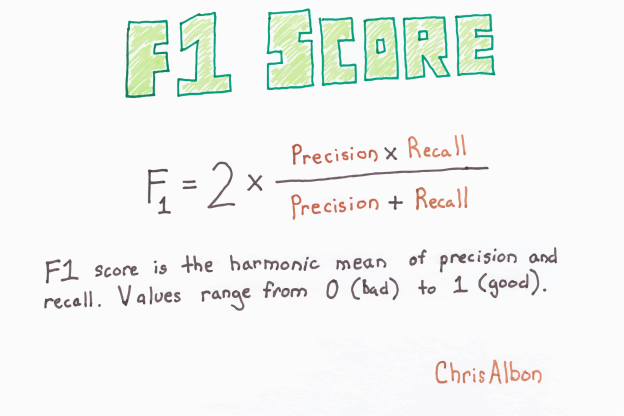

F1 Score

F1 score is the harmonic mean of precision and recall. If one of these two values decreases dramatically, the F1 score also does. F1 score reaches its best value at 1 (perfect precision and recall) and worst at 0.

Formula: 2 * (Precision * Recall) / (Precision + Recall)

F1 score tells you how precise your classifier is (how many of its positive predictions are correct) as well as how robust it is (it does not miss a significant number of positive instances).
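The formulas in the previous sections translate almost literally to code. Here is a minimal sketch that derives the metrics from the four confusion matrix counts. The names are hypothetical, and returning 0 when a denominator is zero is a convention chosen for this example.

```csharp
using System;

// Hypothetical helper for illustration only; ML.NET reports these values in its metrics classes.
public static class MetricsSketch
{
    public static double Accuracy(int tp, int tn, int fp, int fn) =>
        (double)(tp + tn) / (tp + tn + fp + fn);

    // Fraction of actual positives that were found.
    public static double Recall(int tp, int fn) =>
        tp + fn == 0 ? 0.0 : (double)tp / (tp + fn);

    // Fraction of actual negatives that were found.
    public static double Specificity(int tn, int fp) =>
        tn + fp == 0 ? 0.0 : (double)tn / (tn + fp);

    // Fraction of positive predictions that were correct.
    public static double Precision(int tp, int fp) =>
        tp + fp == 0 ? 0.0 : (double)tp / (tp + fp);

    // Harmonic mean of precision and recall.
    public static double F1(double precision, double recall) =>
        precision + recall == 0 ? 0.0 : 2 * precision * recall / (precision + recall);
}
```

For example, with TP = 40, TN = 30, FP = 10 and FN = 20 the precision is 40 / 50 = 0.8, the recall is 40 / 60 ≈ 0.67, and the F1 score is 80 / 110 ≈ 0.73.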

Area under the ROC curve

Area Under Curve (AUC) is one of the most widely used metrics for the evaluation of binary classifiers. AUC is the probability that the classifier will rank a randomly chosen positive example higher than a randomly chosen negative example. The referenced curve is called Receiver Operating Characteristic (ROC) and plots the True Positive Rate (TP / (TP + FN)) against the False Positive Rate (FP / (FP + TN)) for all probability thresholds.

Plotting the curve is an expensive operation, but calculating the area under it is not. AUC measures the entire two-dimensional area underneath the ROC curve from (0,0) to (1,1). In theory the value lies between 0 and 1; in practice it falls between 0.5 (which means that your model behaves like a random classifier) and 1 (too good to be true, you’re probably overfitting). A value below 0.5 means the model is doing worse than random guessing.
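The probabilistic definition of AUC can be turned into code without plotting anything. The sketch below simply counts, over all positive/negative pairs, how often the positive example gets the higher score, with ties counting as half. It is a naive quadratic illustration with hypothetical names; real implementations typically sort the scores instead.

```csharp
using System;

// Hypothetical illustration of the pairwise-ranking view of AUC, not ML.NET's implementation.
public static class AucSketch
{
    // Fraction of (positive, negative) pairs in which the positive example has the higher score.
    public static double Auc(double[] scores, bool[] labels)
    {
        double wins = 0;
        long pairs = 0;
        for (int i = 0; i < scores.Length; i++)
        {
            if (!labels[i]) continue;          // i runs over the positives
            for (int j = 0; j < scores.Length; j++)
            {
                if (labels[j]) continue;       // j runs over the negatives
                pairs++;
                if (scores[i] > scores[j]) wins += 1.0;
                else if (scores[i] == scores[j]) wins += 0.5;
            }
        }
        if (pairs == 0)
            throw new ArgumentException("Need at least one positive and one negative example.");
        return wins / pairs;
    }
}
```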

More Metrics

There are more metrics to evaluate and compare binary classifiers, especially the classifiers that return a probability: Logarithmic Loss and Cross-Entropy and more. Not all algorithms in our sample app are in this category, so we decided to ignore these metrics for the moment.

Now is the time to dive into the code.

Battle of the Binary Stars

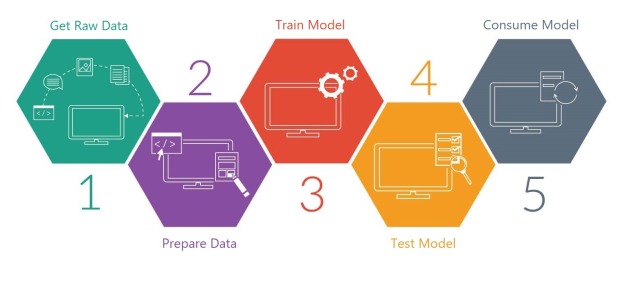

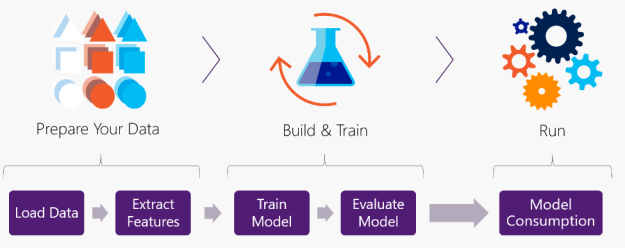

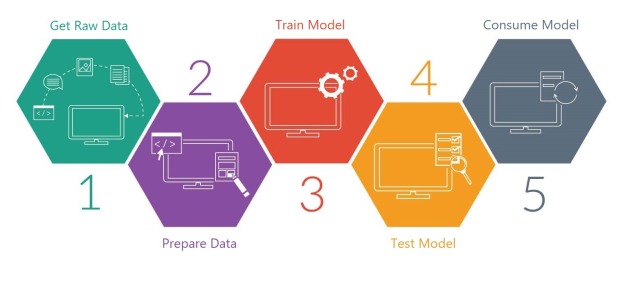

We’re in a traditional Machine Learning use case with reading and preparing the data, training and evaluating the model, and finally using the model for predicting:

Getting the Raw Data

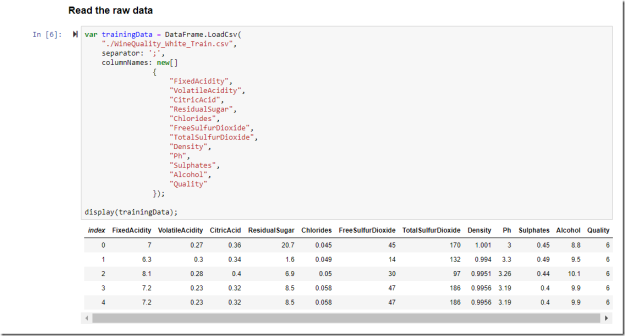

Here’s what the training and testing data sets look like. They list 11 physicochemical and sensory characteristics of Portuguese Vinho Verde white wine, together with a quality score on a scale of 10:

Here’s the corresponding input structure. We decorated the fields with the LoadColumn attribute to facilitate reading the file with one of the built-in ML.NET text readers:

public class BinaryClassificationData

{

[LoadColumn(0)]

public float FixedAcidity;

[LoadColumn(1)]

public float VolatileAcidity;

[LoadColumn(2)]

public float CitricAcid;

[LoadColumn(3)]

public float ResidualSugar;

[LoadColumn(4)]

public float Chlorides;

[LoadColumn(5)]

public float FreeSulfurDioxide;

[LoadColumn(6)]

public float TotalSulfurDioxide;

[LoadColumn(7)]

public float Density;

[LoadColumn(8)]

public float Ph;

[LoadColumn(9)]

public float Sulphates;

[LoadColumn(10)]

public float Alcohol;

[LoadColumn(11), ColumnName("Label")]

public float Label;

}

The models will predict the quality of the wine as a Boolean – good or bad. Here’s what the output structure looks like:

public class BinaryClassificationPrediction

{

[ColumnName("PredictedLabel")]

public bool PredictedLabel;

public int LabelAsNumber => PredictedLabel ? 1 : 0;

}

The file is read with LoadFromTextFile() and stored in memory with Cache(). The latter will improve the training speed for the online learners (the ones that iteratively read a sample), such as SDCA:

var trainData = MLContext.Data.LoadFromTextFile<BinaryClassificationData>(

path: trainingDataPath,

separatorChar: ';',

hasHeader: true);

trainData = MLContext.Data.Cache(trainData);

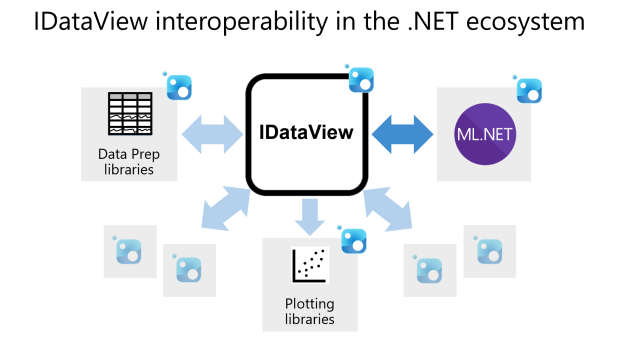

When this code runs, the training data becomes available as an IDataView.

Preparing the data

In ML.NET, the model is defined by a pipeline of components that each apply transformations to the data. Some transformations make the data more representative and compatible with the classifier, other transformations skip the training data with missing or out-of-range values (outliers).

Here are the pipeline steps for our sample:

- fill out missing values for Fixed Acidity,

- translate the numeric quality score to a Boolean,

- create a vector with all feature values, and

- add one of the binary classifiers.

Here’s what that pipeline looks like in C#:

public PredictionModel<BinaryClassificationData, BinaryClassificationPrediction> BuildAndTrain(

string trainingDataPath,

IEstimator<ITransformer> algorithm)

{

IEstimator<ITransformer> pipeline =

MLContext.Transforms.ReplaceMissingValues(

outputColumnName: "FixedAcidity",

replacementMode: MissingValueReplacingEstimator.ReplacementMode.Mean)

.Append(MLContext.FloatToBoolLabelNormalizer())

.Append(MLContext.Transforms.Concatenate("Features",

new[]

{

"FixedAcidity",

"VolatileAcidity",

"CitricAcid",

"ResidualSugar",

"Chlorides",

"FreeSulfurDioxide",

"TotalSulfurDioxide",

"Density",

"Ph",

"Sulphates",

"Alcohol"}))

.Append(algorithm);

// ...

}

Let’s get into the details of some of the preparation steps.

Dealing with missing values

Input data that has missing values for one or more features can ruin the training of your prediction model. ML.NET comes with some useful transforms to deal with this:
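One of them, ReplaceMissingValues with the Mean replacement mode, is the one used in the pipeline above. As a rough illustration of what that mode does conceptually, here is a plain C# sketch. It is not ML.NET's implementation, the names are hypothetical, and it assumes that a missing float is represented as NaN.

```csharp
using System;
using System.Linq;

// Hypothetical illustration of mean replacement, not ML.NET code.
public static class MissingValueSketch
{
    // Replaces every NaN with the mean of the values that are present.
    public static float[] ReplaceWithMean(float[] values)
    {
        var present = values.Where(v => !float.IsNaN(v)).ToArray();
        if (present.Length == 0)
            return (float[])values.Clone();   // nothing to average, leave the column as it is

        float mean = present.Average();
        return values.Select(v => float.IsNaN(v) ? mean : v).ToArray();
    }
}
```

The advantage over dropping the incomplete rows is that the values of the other ten features in those rows remain available for training.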

Building a Custom Data Transformation Component

The FloatToBoolLabelNormalizer in the previous code snippet is a transformation component that we wrote ourselves. It transforms the numeric quality column from the dataset into a Boolean, without relying on hard-coded thresholds. Boolean is the required data type for the binary classifier’s Label column.

The custom component is a mini pipeline on its own: an IEstimator<ITransformer>. We start with dividing all the values into two bins or buckets (feel free to pronounce it as “bouquets”) of the same size, using NormalizeBinning() from the NormalizationCatalog. After this first transformation the Label column now holds 0 or 1, where the algorithm still expects a Boolean.

To transform the 0-or-1 to a Boolean, we created a CustomMappingFactory with some helper input and output classes, and the appropriate CustomMappingFactory attribute. It is appended with CustomMapping() to the mini pipeline.
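To see the idea behind these two steps without any ML.NET plumbing, here is a plain C# sketch that splits quality scores at their median and maps the lower half to false and the upper half to true. The names are hypothetical and the exact bin boundary that ML.NET computes may differ; the sketch also assumes a non-empty array.

```csharp
using System;
using System.Linq;

// Hypothetical illustration of two-bucket binning, not the ML.NET implementation.
public static class LabelBinningSketch
{
    // Splits the scores at the (upper) median: lower bucket -> false, upper bucket -> true.
    public static bool[] ToBooleanLabels(float[] scores)
    {
        var sorted = scores.OrderBy(s => s).ToArray();
        float threshold = sorted[sorted.Length / 2];
        return scores.Select(s => s >= threshold).ToArray();
    }
}
```

Note that with many identical scores, as is typical for integer quality ratings, the two buckets will not be exactly the same size.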

The whole component is made reusable in multiple ML.NET pipelines by exposing it as an extension method to MLContext:

public static class MLContextExtensions

{

/// <summary>

/// Divides the numeric Label in two 'buckets' and transforms it to a Boolean.

/// </summary>

public static IEstimator<ITransformer> FloatToBoolLabelNormalizer(this MLContext mLContext)

{

var normalizer = mLContext.Transforms.NormalizeBinning(

outputColumnName: "Label", maximumBinCount: 2);

return normalizer.Append(mLContext.Transforms.CustomMapping(new MapFloatToBool().GetMapping(), "MapFloatToBool"));

}

private class LabelInput

{

public float Label { get; set; }

}

private class LabelOutput

{

public bool Label { get; set; }

public static LabelOutput True = new LabelOutput() { Label = true };

public static LabelOutput False = new LabelOutput() { Label = false };

}

[CustomMappingFactoryAttribute("MapFloatToBool")]

private class MapFloatToBool : CustomMappingFactory<LabelInput, LabelOutput>

{

public override Action<LabelInput, LabelOutput> GetMapping()

{

return (input, output) =>

{

if (input.Label > 0)

output.Label = true;

else

output.Label = false;

};

}

}

}

For another example and more details, check the excellent ML.NET Cook Book.

Training the Models

We now have a training data set in memory, and a pipeline with all necessary transformation components. All we need to do to create the model is call Fit() and turn it into a PredictionEngine:

ITransformer model = pipeline.Fit(trainData);

return new PredictionModel<BinaryClassificationData, BinaryClassificationPrediction>(

MLContext,

model);

The PredictionModel is a reconstruction of a class that disappeared from the API while we were building the sample app. We have kept it in the code as a little helper to keep the algorithm and its prediction engine together:

public PredictionModel(MLContext mlContext, ITransformer transformer)

{

Transformer = transformer;

Engine = mlContext.Model.CreatePredictionEngine<TSrc, TDst>(Transformer);

}

All that’s left to build the models is to instantiate each of the four algorithms that we’re going to compare.

Here are the private fields to hold all of these:

private PredictionModel<BinaryClassificationData, BinaryClassificationPrediction> _perceptronBinaryModel;

private PredictionModel<BinaryClassificationData, BinaryClassificationPrediction> _linearSvmModel;

private PredictionModel<BinaryClassificationData, BinaryClassificationPrediction> _logisticRegressionModel;

private PredictionModel<BinaryClassificationData, BinaryClassificationPrediction> _sdcabModel;

And these are the calls to build and train all four models:

_perceptronBinaryModel = await ViewModel.BuildAndTrain(trainingDataLocation, ViewModel.MLContext.BinaryClassification.Trainers.AveragedPerceptron());

_linearSvmModel = await ViewModel.BuildAndTrain(trainingDataLocation, ViewModel.MLContext.BinaryClassification.Trainers.LinearSvm());

_logisticRegressionModel = await ViewModel.BuildAndTrain(trainingDataLocation, ViewModel.MLContext.BinaryClassification.Trainers.LbfgsLogisticRegression());

_sdcabModel = await ViewModel.BuildAndTrain(trainingDataLocation, ViewModel.MLContext.BinaryClassification.Trainers.SdcaLogisticRegression());

We confess that this code is implemented in the MVVM View and we probably deserve a Walk of Atonement for this (“shame … shame … shame”). We just did not know upfront which and how many of the algorithms would work in a UWP context, hence the exploratory code patterns.

Testing the Models

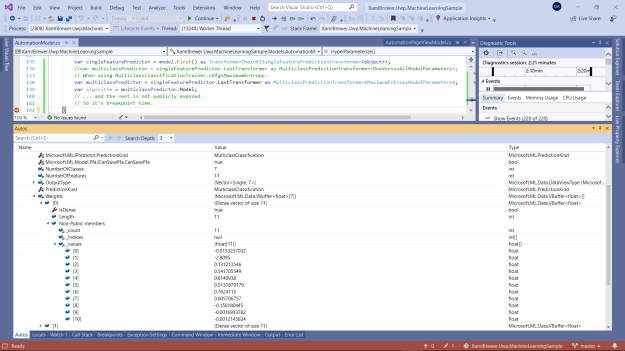

Binary classifiers fall into two categories (pun intended): the ones that return a category class, and the ones that return a probability. In ML.NET each type has its own metrics class: BinaryClassificationMetrics versus CalibratedBinaryClassificationMetrics. Fortunately there is an inheritance relationship between these two: the calibrated class extends the list of standard binary metrics (such as Accuracy, F1Score, the whole ConfusionMatrix, and the AreaUnderRocCurve) with the probability-related ones (such as LogLoss and Entropy).

The ML.NET API confusingly forces two different calls to trigger the evaluation: Evaluate() versus EvaluateNonCalibrated(). Here are the wrapper methods in the sample app that load and prepare the test data set, generate predictions with Transform(), compare the predicted with the actual results, and finally return the metrics:

public CalibratedBinaryClassificationMetrics Evaluate(

PredictionModel<BinaryClassificationData, BinaryClassificationPrediction> model,

string testDataLocation)

{

var testData = MLContext.Data.LoadFromTextFile<BinaryClassificationData>(

path: testDataLocation,

separatorChar: ';',

hasHeader: true);

var scoredData = model.Transformer.Transform(testData);

return MLContext.BinaryClassification.Evaluate(scoredData);

}

public BinaryClassificationMetrics EvaluateNonCalibrated(

PredictionModel<BinaryClassificationData, BinaryClassificationPrediction> model,

string testDataLocation)

{

var testData = MLContext.Data.LoadFromTextFile<BinaryClassificationData>(

path: testDataLocation,

separatorChar: ';',

hasHeader: true);

var scoredData = model.Transformer.Transform(testData);

return MLContext.BinaryClassification.EvaluateNonCalibrated(scoredData);

}

Here’s the call in the View that fetches these metrics:

BinaryClassificationMetrics metrics = await ViewModel.EvaluateNonCalibrated(_perceptronBinaryModel, _testDataPath);

Again, there are some violations of the MVVM pattern here, but we did not know which and how many of these metrics would end up in the diagram.

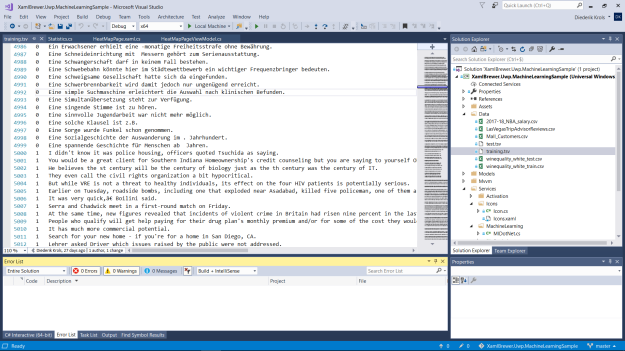

Here’s how the metrics are visualized with a bar chart in the sample app:

The lack of quality in the two models on the left is clearly noticeable. The good wines do not seem to be linearly separable from the bad wines. It seems that logistic regression is the way to go in this particular scenario. The dataset is also not big enough to justify SDCA: the last algorithm is not better than the third, but it is clearly a lot slower.

Saving the Models

The ML.NET API could not be more straightforward: use Model.Save() to … save the model:

public void Save(

PredictionModel<BinaryClassificationData, BinaryClassificationPrediction> model,

string modelName)

{

var storageFolder = ApplicationData.Current.LocalFolder;

string modelPath = Path.Combine(storageFolder.Path, modelName);

MLContext.Model.Save(

model: model.Transformer,

inputSchema: null,

filePath: modelPath);

}

Consuming the Models

We assume that in most cases you’ll be interested at runtime in assessing the quality of just a limited number of wines, so we built the prediction method around PredictionEngine.Predict() – the call to generate a single prediction:

public IEnumerable<BinaryClassificationPrediction> Predict(

PredictionModel<BinaryClassificationData, BinaryClassificationPrediction> model,

IEnumerable<BinaryClassificationData> data)

{

foreach (BinaryClassificationData datum in data)

yield return model.Engine.Predict(datum);

}

The sample app uses this method to get predictions from each of the 4 models for the first 50 wines in the test data set:

var testDataLocation = await MlDotNet.FilePath(@"ms-appx:///Data/winequality_white_test.csv");

var tests = await ViewModel.GetSample(testDataLocation);

var size = 50;

var data = tests.ToList().Take(size);

var perceptronPrediction = (await ViewModel.Predict(_perceptronBinaryModel, data)).ToList();

var linearSvmPrediction = (await ViewModel.Predict(_linearSvmModel, data)).ToList();

var logisticRegressionPrediction = (await ViewModel.Predict(_logisticRegressionModel, data)).ToList();

var sdcabPrediction = (await ViewModel.Predict(_sdcabModel, data)).ToList();

This code gets called when you click the ‘View Disagreements’ button. Observe that the models, even those from the same family (linear or logistic), disagree on quite a few samples.

The table in the center of the page lists the differences:

It is clear that the linear models are the drama queens in this setup. They’re too easily overoptimistic or overpessimistic on the quality of the wines.
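Finding the disagreements is a matter of comparing the prediction lists position by position. Here is a minimal sketch of that comparison with hypothetical names; the code in the sample app may do this differently. It returns the indices of the samples for which at least one model deviates from the first one.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper for illustration only, not taken from the sample app.
public static class DisagreementSketch
{
    // Returns the indices of the samples on which not all models give the same answer.
    public static List<int> FindDisagreements(params IReadOnlyList<bool>[] predictionsPerModel)
    {
        // Only compare positions that every model has a prediction for.
        int count = predictionsPerModel.Min(p => p.Count);
        return Enumerable.Range(0, count)
            .Where(i => predictionsPerModel.Any(p => p[i] != predictionsPerModel[0][i]))
            .ToList();
    }
}
```

With the prediction lists from the snippet above, the input for each model could be obtained by projecting on PredictedLabel, for example perceptronPrediction.Select(p => p.PredictedLabel).ToList().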

All the code and more

The UWP sample app lives here on GitHub. It hosts a lot more ML.NET scenarios than what we covered in this article. Look at the code, play with the code. If it sparks joy, please return the favor and give the repo a star!

Enjoy!

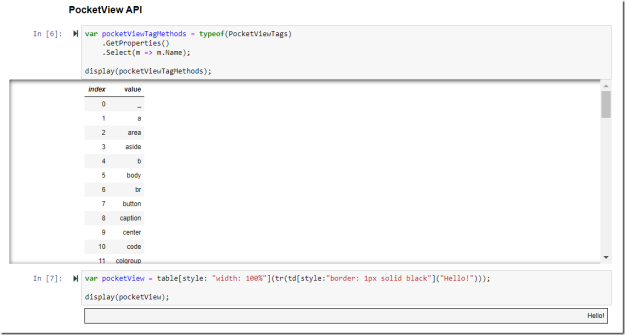
